3D XPoint: A Memory Game Changer

The new memory technology from Intel and Micron will bring massive changes to storage systems with major repercussions in the data center.

November 12, 2015

When Intel and Micron unveiled their 3D XPoint memory technology this summer, it was déjà vu: Here we go again, another whoopty-doo computer technology that’s supposed to be faster than a speeding bullet! But this time around, there's a reason to get excited; this really is a big deal. 3D XPoint is more than just faster flash technology -- it has far-ranging implications for enterprise data centers and mobile devices.

According to Intel, 3D XPoint is the culmination of a number of technology initiatives such as Non-Volatile Memory Express (NVMe), which it pushed with Micron. NVMe is a queue-based IO system that drastically reduces IO overhead, consolidates interrupts to cut down on state switching, and supports as many as 64,000 queues, which can be tied to individual cores or applications to reduce overhead even further.
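To make the queue-per-core idea concrete, here is a toy model in Python. It is only a sketch: `QueuePair`, `ToyController`, and the command strings are hypothetical names invented for illustration, not part of any NVMe driver or specification. It shows one thing, that each core submits to its own queue pair and so never contends with other cores for a shared queue.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Toy stand-in for one submission/completion queue pair (hypothetical)."""
    submission: deque = field(default_factory=deque)
    completion: deque = field(default_factory=deque)


class ToyController:
    """Hypothetical model: one queue pair per core, so cores never share a queue."""

    def __init__(self, num_cores: int) -> None:
        self.pairs = [QueuePair() for _ in range(num_cores)]

    def submit(self, core: int, command: str) -> None:
        # Each core appends only to its own submission queue.
        self.pairs[core].submission.append(command)

    def process(self, core: int) -> int:
        """Drain one core's submission queue; return how many commands completed."""
        pair = self.pairs[core]
        done = 0
        while pair.submission:
            pair.completion.append(("ok", pair.submission.popleft()))
            done += 1
        # In this toy model the whole batch is reported at once, loosely
        # mirroring the idea of consolidating interrupts.
        return done


controller = ToyController(num_cores=4)
controller.submit(0, "read lba=10")
controller.submit(0, "write lba=11")
controller.submit(3, "read lba=99")
completed_on_core0 = controller.process(0)
```

Draining core 0 leaves core 3's pending command untouched, which is the isolation that per-core queues are meant to provide.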

One of the two forms of 3D XPoint memory will use NVMe over either PCIe or Omni-Path, the new fast interconnect Intel is pushing. This puts all the makers of NAND flash NVMe solutions, including SanDisk, on notice that they are about to be overtaken by much faster drives. Likewise, all-flash arrays are heading for a major technology refresh, which will be interesting because it will show who is nimble and who is ponderously slow. For example, will Violin Memory jump ahead because its AFAs are smart and easy to change? Will EMC fall behind due to the confusion caused by Dell's proposed acquisition?

The second form of 3D XPoint, which will come out in 2016, is a whole different proposition. This is a DIMM-based solution with memory bus access. Latency is higher than DRAM's, but not by much, and certainly much lower than that of the NVMe version. Micron, incidentally, has just released a NAND flash-DIMM product that positions it for a 3D XPoint DIMM product next year.

Such speed would be a game changer for in-memory databases. 3D XPoint could be used as a memory extender as well as a fast persistent data store that’s closer to the CPU than NVMe over PCIe. But there’s more: I’m speculating that we can expect the 3D XPoint DIMM memory to be byte addressable, though Intel is thin on details of the DIMM solution. This really is a big change in the way systems operate.

Now, byte-addressable persistent memory is potentially a huge speed boost in applications such as databases. Instead of going through the file software stack and then sending 4K bytes to a disk, the app can literally write one byte in a single CPU operation. This simplifies the process of keeping safe copies of the database parameters so much that we could be looking at overall performance gains of perhaps as much as 100x compared with current in-memory databases. Read operations speed up just as much, since CPU memory instructions read exactly what is needed.
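The contrast between block IO and a one-byte store can be sketched in Python with a memory-mapped file. This is an analogy, not a 3D XPoint API: an ordinary temporary file stands in for the persistent region, the offsets and values are arbitrary, and the "bytes moved" counters only track what the application itself touches (the operating system still writes back whole pages underneath a regular file).

```python
import mmap
import os
import tempfile

BLOCK_SIZE = 4096  # the 4K block size mentioned in the text

# Assumption: an ordinary temp file stands in for a persistent-memory region.
fd, path = tempfile.mkstemp()
os.write(fd, bytes(BLOCK_SIZE))  # one zero-filled block
os.close(fd)

# Block-style update: read the whole block, change one byte, write it all back.
with open(path, "r+b") as f:
    block = bytearray(f.read(BLOCK_SIZE))
    block[100] = 0x41
    f.seek(0)
    f.write(block)
    block_bytes_moved = 2 * BLOCK_SIZE  # 4K read plus 4K written by the app

# Byte-style update: map the region and store a single byte in place.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), BLOCK_SIZE) as region:
        region[200] = 0x42  # one-byte store, no read-modify-write in the app
        region.flush()      # ask the OS to push the change to the file
        byte_bytes_moved = 1  # bytes touched by the application

with open(path, "rb") as f:
    final = f.read()
os.remove(path)
```

Both updates end up in the file, but the block-style path has the application shuffle 8,192 bytes to change one, while the mapped path touches a single byte. With true byte-addressable persistent memory, the hope is that the hardware path would be similarly direct.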

The database mavens such as Oracle get an edge from this technology. They can hide the software changes to increase the speed behind existing SQL operations, so time to market for user applications will be quite fast.

To make the software changes possible, Intel has to alter its compiler to support a Persistent Memory address class and other changes such as the Link Editor to get it to the user application level. Here is where the rubber meets the road a bit. The new constructs remove the need for routines using asynchronous IO to write out to disk. These could be replaced by simplified IO constructs, but a byte-writable persistent memory doesn’t need any of this overhead. Any byte can be written or read directly from any routine in the app.

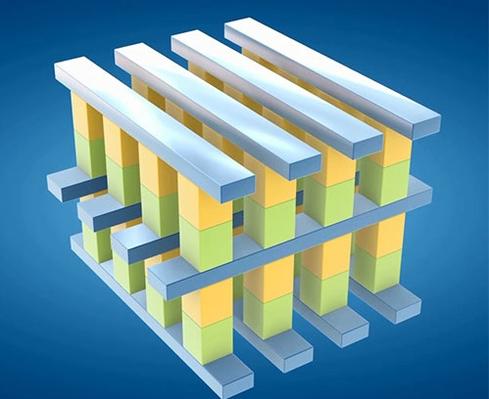

Figure 1: 3D XPoint Technology


Clearly, this changes apps drastically, and the transition will take time, but the ability to save-and-continue at blinding speed can’t be ignored. It will make apps written to that paradigm much faster. The easiest change is to pick high-usage parts of the app, such as memcached operations, and rewrite them for persistent memory.

The impact of this big speed-up will be felt strongly in the legacy systems area. Already under strong pressure to change, legacy code can’t transition to the new world of direct-write persistent memory. It will fall much further behind in cost and performance, and that is likely to crack open the floodgates of replacement at last. It won’t happen overnight, but the pace of replacement will be much higher than it currently is; SaaS and database solutions using the direct-write approach will be the likely winners.

If all this isn’t enough, Micron and Intel have been developing a different 3D memory system. Hybrid Memory Cube (HMC) is a way to bring the CPU and DRAM close to each other on a single module. This closeness reduces power losses dramatically and eliminates a large share of the transistors needed to drive the interfaces. With much higher memory speeds, over 300 GBps, HMC is an ideal candidate for a hybrid of DRAM and 3D XPoint.

If Intel pulls off this trifecta of approaches, it will be in a position to own most of the silicon value-add in any all-solid-state system. But what systems? HMC and direct-write memories work just as well in smartphones as in servers. Likely we’ll see both plays, with smartphones getting the benefit of savings in weight and battery life as well as operating speed. Servers, with containers, could have many more cores and host a lot more VMs.

The law of unintended consequences could make things interesting, though. Smartphones will need time to evolve a new set of apps and features to do justice to the new approach, while we’ll need a lot fewer servers to do the IT job. The combination of containers and direct-write memory could mean a 90% reduction in server units bought, stopping data center expansion dead, even in the cloud. Against that, the replacement of legacy systems could bring a lot of churn to existing operations.

HP and SanDisk responded to the hoopla over 3D XPoint with a release about their nonvolatile DIMMs. While they looked a bit unprepared, these two companies at least see the potential of NVDIMMs. The next half decade is likely to be the most interesting in computer systems design in a long time!
