OpenStack Neutron Components and Concepts

Learn about the cloud networking service in this excerpt from Packt's "OpenStack: Building a Cloud Environment."

July 14, 2017

Neutron replaced an older version of the network service called Quantum, which was introduced as a part of the Folsom release of OpenStack. Before Quantum came into the picture, the networking of the Nova components was controlled by Nova networking, a subcomponent of Nova. The name of the networking component was changed from Quantum to Neutron due to a trademark conflict (Quantum was a trademark of a tape-based backup system).

While Neutron is the way to go, if you need only simple networking in your cloud, you can still choose to use the Nova network feature and ignore the Neutron service completely. But if you do go the Neutron route, you can easily offer several services, such as load balancing as a service (using HAProxy) and VPN as a service (using Openswan).

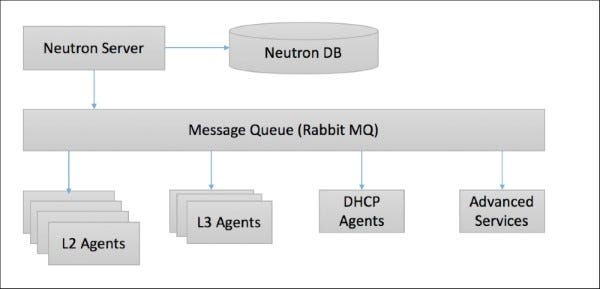

Neutron has a component on the controller node called the neutron server, along with a bunch of agents and plugins that communicate with each other using a messaging queue. Depending on the type of deployment, you can choose the different agents that you want to use.

Some plugins that are available today with Neutron include but are not limited to the following:

NEC OpenFlow

Open vSwitch

Cisco switches (NX-OS)

Linux bridging

VMware NSX

Juniper OpenContrail

Ryu network OS

PLUMgrid Director plugin

Midokura Midonet plugin

OpenDaylight plugin

You can choose to write more of these, and the support is expanding every day. So, by the time you get on to implementing it, maybe your favorite device vendor will also have a Neutron plugin that you can use.

Tip

In order to view the updated list for plugins and drivers, refer to the OpenStack wiki page.

Architecture of Neutron

The architecture of Neutron is simple; the real magic happens in the agents and plugins. The following diagram presents the Neutron architecture:

Neutron-1.jpg

Let's discuss the role of the different components in a little detail.

The Neutron server

The function of this component is to be the face of the entire Neutron environment to the outside world. It essentially is made up of three modules:

REST service: The REST service accepts API requests from the other components and exposes all the internal working details in terms of networks, subnets, ports, and so on. It is a WSGI application written in Python and uses port 9696 for communication.

RPC service: The RPC service communicates with the messaging bus, and its function is to enable bidirectional communication with the agents.

Plugin: A plugin is best described as a collection of Python modules that implement a standard interface, which accepts some standard API calls and then connects with devices downstream. Plugins can be simple, or they can implement drivers for multiple classes of devices.
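To make the REST service a little more concrete, here is a minimal sketch that builds (but does not send) two HTTP requests against the port mentioned above. The hostname `controller`, the token value, and the helper function names are assumptions made for illustration; the `/v2.0/networks` path follows the Networking API v2.0 layout, and a real deployment would normally use a client library rather than hand-built requests.

```python
import json
from urllib.request import Request

# Assumption: "controller" is a placeholder hostname for the node that runs
# the Neutron server; 9696 is the port named in the text.
NEUTRON_ENDPOINT = "http://controller:9696"


def build_list_networks_request(token: str) -> Request:
    """Build (but do not send) a GET request for the networks resource."""
    return Request(
        url=f"{NEUTRON_ENDPOINT}/v2.0/networks",
        headers={"X-Auth-Token": token, "Accept": "application/json"},
        method="GET",
    )


def build_create_network_request(token: str, name: str) -> Request:
    """Build (but do not send) a POST request that would create a network."""
    body = json.dumps({"network": {"name": name, "admin_state_up": True}})
    return Request(
        url=f"{NEUTRON_ENDPOINT}/v2.0/networks",
        data=body.encode("utf-8"),
        headers={"X-Auth-Token": token, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # "example-token" is a made-up value; a real token comes from Keystone.
    request = build_create_network_request("example-token", "demo-net")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Nothing is sent over the network here, so the sketch can be run without an OpenStack installation; it only shows the shape of the calls that the REST service accepts.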

The plugins are further divided into two kinds. Core plugins implement the core Neutron API, which covers Layer 2 networking (switching) and IP address management. A plugin that provides additional network services is called a service plugin, for example, Load Balancing as a Service (LBaaS), Firewall as a Service (FWaaS), and so on.

As an example, Modular Layer 2 (ML2) is a plugin framework that implements drivers and can perform the same function across the Layer 2 networking technologies commonly used in datacenters. We will use ML2 in our installation to work with Open vSwitch (OVS).
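The idea of one plugin fronting several drivers can be illustrated with a toy model. This is only a sketch of the pattern: the class names `MechanismDriver`, `OpenVSwitchDriver`, `LinuxBridgeDriver`, and `ModularCorePlugin` are hypothetical and are not Neutron's actual classes or signatures.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class MechanismDriver(ABC):
    """Hypothetical driver interface: one implementation per L2 technology."""

    @abstractmethod
    def create_network(self, name: str) -> str:
        """Apply the change on the underlying technology and describe it."""


class OpenVSwitchDriver(MechanismDriver):
    def create_network(self, name: str) -> str:
        return f"ovs: created network {name}"


class LinuxBridgeDriver(MechanismDriver):
    def create_network(self, name: str) -> str:
        return f"linuxbridge: created network {name}"


class ModularCorePlugin:
    """Toy core plugin that forwards one standard call to every loaded driver."""

    def __init__(self, drivers: list[MechanismDriver]) -> None:
        self.drivers = drivers

    def create_network(self, name: str) -> list[str]:
        # The caller sees a single API; each driver handles its own devices.
        return [driver.create_network(name) for driver in self.drivers]


if __name__ == "__main__":
    plugin = ModularCorePlugin([OpenVSwitchDriver(), LinuxBridgeDriver()])
    for line in plugin.create_network("demo-net"):
        print(line)
```

The point of the design is that the API caller never needs to know which switching technology sits underneath; adding support for a new one means adding a driver, not a new plugin.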

L2 agent

The L2 agent runs on the hypervisor (compute nodes), and its function is simply to wire new devices, which means it provides connections to new servers in appropriate network segments and also provides notifications when a device is attached or removed. In our install, we will use the OVS agent.

L3 agent

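The L3 agents run on the network node and are responsible for static routing, IP forwarding, and other L3 features. DHCP, which is often mentioned alongside these features, is normally served by a separate DHCP agent rather than by the L3 agent itself.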

Understanding the basic Neutron process

Let's take a quick look at what happens when a new VM is booted with Neutron. This shows all the steps that take place during the Layer 2 stage:

Start booting the VM.

Create a port and notify the DHCP agent of the new port.

Create a new device (virtualization library – libvirt).

Wire port (connect the VM to the new port).

Complete boot.
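The five steps above can be traced with a small simulation. This is a sketch only: the function `boot_vm`, the `port-` and `tap-` naming scheme, and the event strings are invented for illustration and do not correspond to real Nova, Neutron, or libvirt calls.

```python
def boot_vm(vm_name: str, network: str) -> list:
    """Return the ordered Layer 2 events for booting one VM (simulation only)."""
    events = []

    # Step 1: the boot starts.
    events.append(f"boot started for {vm_name}")

    # Step 2: a port is created and the DHCP side learns about it.
    port_id = f"port-{vm_name}"  # hypothetical identifier
    events.append(f"{port_id} created on {network}; DHCP notified")

    # Step 3: the virtualization library (libvirt) creates the device.
    device = f"tap-{vm_name}"  # hypothetical device name
    events.append(f"{device} created via libvirt")

    # Step 4: the L2 agent wires the device to the port.
    events.append(f"{device} wired to {port_id}")

    # Step 5: the boot completes.
    events.append(f"boot complete for {vm_name}")
    return events


if __name__ == "__main__":
    for step, event in enumerate(boot_vm("vm1", "demo-net"), start=1):
        print(step, event)
```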


Networking concepts in Neutron

It is also a good idea to get familiar with some of the concepts that we will come across while dealing with Neutron, so let's take a look at some of them. The networking provides multiple levels of abstraction:

Network: A network is an isolated L2 segment, analogous to a VLAN in the physical networking world.

Subnet: This is a block of IP addresses and the associated configuration state. Multiple subnets can be associated with a single network (similar to secondary IP addresses on switched virtual interfaces of a switch).

Port: A port is a connection point to attach a single device, such as the NIC of a virtual server, to a virtual network. We have all seen the physical ports into which we plug our laptops or servers; the virtual ones are quite analogous, with the difference that these ports belong to a virtual switch and we connect them to our servers using a virtual wire.

Router: A router is a device that can route traffic between different subnets and networks. Any subnets attached to the same router can talk to each other without additional routing table entries, provided the security groups allow the connection.
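The relationship between these four abstractions can be sketched as a tiny data model. The class and method names below are hypothetical and much simpler than Neutron's real objects; in particular, security groups are ignored, so `connects` only answers whether both ports sit on subnets attached to the same router.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Subnet:
    cidr: str  # a block of IP addresses, e.g. "10.0.1.0/24"

    def contains(self, ip: str) -> bool:
        return ipaddress.ip_address(ip) in ipaddress.ip_network(self.cidr)


@dataclass
class Network:
    name: str  # an isolated L2 segment
    subnets: list = field(default_factory=list)  # one network, many subnets


@dataclass
class Port:
    network: Network  # the virtual network this port belongs to
    ip: str           # address of the single attached device

    def subnet(self):
        """Return the subnet of the port's network that holds its IP, if any."""
        for candidate in self.network.subnets:
            if candidate.contains(self.ip):
                return candidate
        return None


@dataclass
class Router:
    attached: list = field(default_factory=list)  # subnets plugged into the router

    def connects(self, a: Port, b: Port) -> bool:
        """True when both ports sit on subnets attached to this router."""
        subnet_a, subnet_b = a.subnet(), b.subnet()
        if subnet_a is None or subnet_b is None:
            return False
        return subnet_a in self.attached and subnet_b in self.attached


if __name__ == "__main__":
    web = Network("web", [Subnet("10.0.1.0/24")])
    db = Network("db", [Subnet("10.0.2.0/24")])
    router = Router([web.subnets[0], db.subnets[0]])
    print(router.connects(Port(web, "10.0.1.5"), Port(db, "10.0.2.7")))
```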

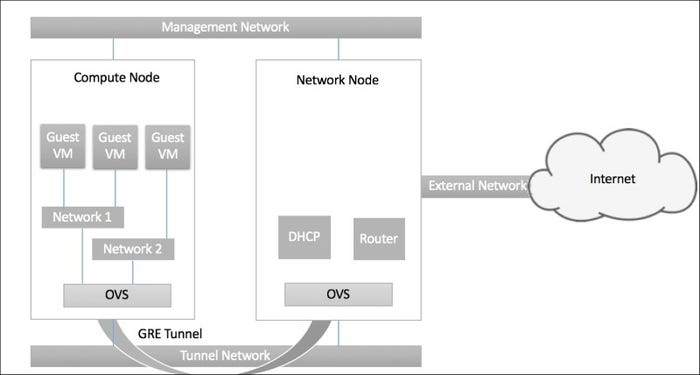

In order to express this better, let's take a look at the following diagram, which shows the connectivity between the Compute node and Network nodes -- the management network is used by administrators to configure the nodes and other management activities (these networks and their purposes have been described in Chapter 1, An Introduction to OpenStack):

Neutron-2.jpg

The Tunnel Network exists between the compute nodes and the network node and serves to build a GRE tunnel between them. This GRE tunnel, as we know, encapsulates the different networks created on the compute nodes, so that the physical fabric doesn't see any of them. In our configurations, we will set up the VLAN ranges for this purpose.
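One way to picture what a configured ID range is for is a simple allocator that hands each virtual network its own identifier from that range. This is a sketch under stated assumptions: the class `SegmentAllocator`, its methods, and the range values are hypothetical, and it does not reproduce how Neutron or Open vSwitch actually assign IDs.

```python
class SegmentAllocator:
    """Hand out one ID per virtual network from a configured range (sketch)."""

    def __init__(self, first: int, last: int) -> None:
        self._free = list(range(first, last + 1))  # IDs not yet in use
        self._by_network = {}                      # network name -> ID

    def allocate(self, network_name: str) -> int:
        # A network that already has an ID keeps it.
        if network_name in self._by_network:
            return self._by_network[network_name]
        if not self._free:
            raise RuntimeError("no segment IDs left in the configured range")
        segment_id = self._free.pop(0)
        self._by_network[network_name] = segment_id
        return segment_id

    def release(self, network_name: str) -> None:
        # Return the ID to the pool so that a later network can reuse it.
        segment_id = self._by_network.pop(network_name)
        self._free.append(segment_id)
        self._free.sort()


if __name__ == "__main__":
    allocator = SegmentAllocator(100, 199)  # made-up range for illustration
    print(allocator.allocate("tenant-a-net"))
    print(allocator.allocate("tenant-b-net"))
```

Because the IDs only have meaning inside the encapsulated traffic, the size of the range bounds how many isolated networks can exist at once without touching the physical switches.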

The network node primarily performs the Layer 3 functionality, be it routing between the different networks or routing the networks to an external network using its external interface and the OVS bridge created on the network node. In addition, it performs other related functions such as firewalling and load balancing. It also terminates floating IPs (OpenStack's counterpart to elastic IPs) at this level.

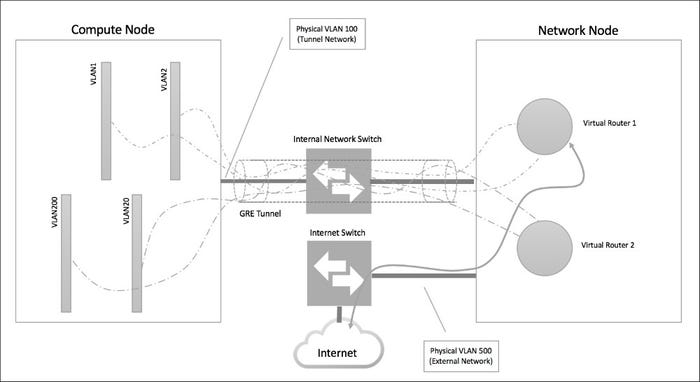

So let's take a look at how this would look in the real world:

Neutron-3.jpg

As you can see from the diagram, the physical network has absolutely no idea of the existence of the different VLANs, which will now be assigned to different tenants or the same tenant for different purposes. We can create as many VLANs as needed, and the underlying physical network will not be affected.

The virtual router in the network node will be responsible for routing between the different VLANs, and the router may also provide access to the Internet using another network interface in the network node (we call it the external network).

To summarize, ports are the connection points on a network to which a guest VM can be attached. The network is equivalent to a VLAN on a virtual switch and may contain one or more subnets; it is a Layer 2 network domain. Different networks are connected to the router on the network node using a GRE tunnel, and each network is encapsulated with a single VLAN ID for identification.

To learn more about OpenStack features, check out "OpenStack: Building a Cloud Environment," published by Packt and written by Alok Shrivastwa, Kevin Jackson, Cody Bunch, Egle Sigler, and Tony Campbell.
