Ethernet Disk Drives: Will They Catch On?

Seagate and HGST have designed Ethernet drives, but can these products change the storage status quo?

September 2, 2014

It was a question drive makers had avoided for nearly two decades: why not put Ethernet interfaces directly onto disk drives? The status quo was part of the reason. SCSI was too entrenched, and SAS/SATA took over from it relatively seamlessly. Ethernet offered no help at the time because, at 100 Mbps and then 1 Gbps, it was the slower alternative.

Today, the speed issue is behind us: 10 GbE is roughly as fast as SAS. As a result, we are finally seeing drives that speak Ethernet natively arriving from the two major hard disk drive makers, Seagate and Western Digital's HGST subsidiary. Will Ethernet drives become mainstream storage? Let's first look at what these vendors have developed.
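
To put "about the same speed" into numbers, here is a rough conversion of nominal link rates into usable megabytes per second. It is a back-of-the-envelope sketch, not a benchmark: the encoding overheads are standard figures for these links, while the 200 MB/s per-drive streaming rate is an illustrative assumption, and protocol headers are ignored.

```python
# Rough usable bandwidth of several drive interfaces, in MB/s (10**6 bytes/s).
# Assumptions, not figures from the article:
#   * SAS at 6 and 12 Gb/s uses 8b/10b encoding, so 80% of the line rate is data.
#   * Ethernet rates are MAC data rates; 64b/66b encoding sits below that figure.
#   * Protocol headers (TCP/IP, SCSI framing) are ignored.
#   * One hard drive streams about 200 MB/s (an illustrative round number).

LINKS = {
    # name: (nominal rate in Gb/s, fraction of that rate carrying data)
    "1 GbE": (1.0, 1.0),
    "SAS 6 Gb/s": (6.0, 0.8),
    "10 GbE": (10.0, 1.0),
    "SAS 12 Gb/s": (12.0, 0.8),
}
DRIVE_MB_S = 200.0


def usable_mb_per_s(gbps: float, efficiency: float) -> float:
    """Convert a nominal link rate in Gb/s to usable megabytes per second."""
    return gbps * efficiency * 1000 / 8


for name, (gbps, eff) in LINKS.items():
    rate = usable_mb_per_s(gbps, eff)
    verdict = "bottlenecks" if rate < DRIVE_MB_S else "keeps up with"
    print(f"{name:>11}: {rate:6.0f} MB/s, {verdict} one drive")
```

On these assumptions 10 GbE (1,250 MB/s) lands within a few percent of 12 Gb/s SAS (1,200 MB/s), while 1 GbE (125 MB/s) cannot keep even one drive streaming at full speed.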

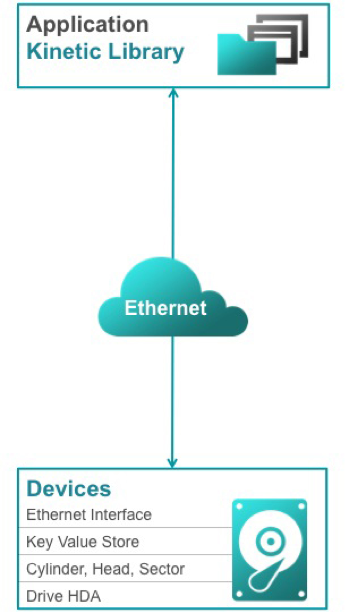

Seagate and HGST take very different approaches to the Ethernet drive. Seagate has chosen a brand-new "Kinetic" interface based on big-data constructs. It uses a key-value approach to store data at the object level on the drives. The Kinetic interface is an attempt to bypass the many layers of the current operating system file stack, in recognition that much of the data written today is at the object level, not the block level.
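
The difference between a block interface and an object-level, key-value interface can be sketched with two toy in-memory drives. This is an illustration under stated assumptions only: `BlockDrive`, `KeyValueDrive` and their methods are hypothetical names, and `KeyValueDrive` is not Seagate's actual Kinetic API. The point is who keeps track of where an object's bytes live.

```python
from typing import Dict, List, Optional, Tuple


class BlockDrive:
    """Block interface: the drive stores fixed-size, numbered blocks and knows
    nothing about which object a block belongs to."""

    def __init__(self, num_blocks: int, block_size: int = 4096) -> None:
        self.block_size = block_size
        self.blocks: List[bytes] = [bytes(block_size)] * num_blocks

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("writes must be exactly one block")
        self.blocks[lba] = data

    def read_block(self, lba: int) -> bytes:
        return self.blocks[lba]


class KeyValueDrive:
    """Hypothetical key-value interface in the spirit of Kinetic (not its real
    API): the host names an object and the drive decides where it lives."""

    def __init__(self) -> None:
        self._store: Dict[bytes, bytes] = {}

    def put(self, key: bytes, value: bytes) -> None:
        self._store[key] = value

    def get(self, key: bytes) -> Optional[bytes]:
        return self._store.get(key)

    def delete(self, key: bytes) -> bool:
        return self._store.pop(key, None) is not None


obj = b"x" * 10_000  # one 10 KB object

# Key-value drive: a single call, and placement is the drive's job.
kv = KeyValueDrive()
kv.put(b"photos/cat.jpg", obj)

# Block drive: the host splits the object into blocks, picks addresses with a
# naive allocator (0, 1, 2, ...) and keeps its own object -> blocks map, which
# is the job a file system normally does.
blk = BlockDrive(num_blocks=16)
extent_map: Dict[bytes, Tuple[List[int], int]] = {}
lbas: List[int] = []
for lba, offset in enumerate(range(0, len(obj), blk.block_size)):
    chunk = obj[offset:offset + blk.block_size].ljust(blk.block_size, b"\0")
    blk.write_block(lba, chunk)
    lbas.append(lba)
extent_map[b"photos/cat.jpg"] = (lbas, len(obj))
```

With the block drive, the extent map and the allocator are host-side layers; with the key-value drive they move into the drive, which is what lets the host stack become thinner.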

Seagate is focused on the high-growth big-data space, where total storage needs are expected to increase well over 100x in the next decade. By eliminating a layer of servers in a large-scale configuration, these drives should reduce both TCO and power consumption.

HGST has a very different worldview: its Ethernet drive presents itself to the world as a Linux server. This means, at least in theory, that a drive could be turned into a file server or an object store for Ceph or OpenStack Swift, for example, just by adding the appropriate app to the drive. As the number of drives increases, so does the compute power available to run them, which is a good story for scale-out configurations.

HGST's approach relies on its Linux “server” having enough power to do the job, which means extra DRAM for file or object metadata and a processor fast enough to handle it. There are also significant support and security issues with drives that users can reprogram. The best model for this type of drive may well be a pre-configured appliance, such as a file server with built-in data-integrity software, which takes programmability out of end users' hands.
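
How much DRAM is "enough" depends mostly on how many objects a drive holds, since each one needs an index entry. The estimate below is a back-of-the-envelope sketch; the 4 TB capacity, the average object sizes and the 100-byte index entry are hypothetical values, not HGST specifications.

```python
def metadata_dram_bytes(capacity_bytes: int, avg_object_bytes: int,
                        bytes_per_entry: int) -> int:
    """DRAM needed to hold one index entry per object, assuming the drive is
    full of objects of the given average size."""
    num_objects = -(-capacity_bytes // avg_object_bytes)  # ceiling division
    return num_objects * bytes_per_entry


TB = 10**12
CAPACITY = 4 * TB      # hypothetical drive size
ENTRY_BYTES = 100      # hypothetical: key, location, length, checksum

for avg_kib in (4096, 1024, 64):
    need = metadata_dram_bytes(CAPACITY, avg_kib * 1024, ENTRY_BYTES)
    print(f"average object {avg_kib:>4} KiB -> {need / 10**9:5.2f} GB of DRAM")
```

Under these assumptions, shrinking the average object from 4 MiB to 64 KiB raises the requirement from about 0.1 GB to about 6 GB, which is why small-object workloads put the most pressure on a drive's embedded memory.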

In a sense, HGST has turned the idea of using servers for software-defined storage on its head, by using the drive as a server. But don’t expect to run Oracle on the drive CPU in the near future; there isn’t enough horsepower in the drive.

Seagate also sees the advantage of compute scaling linearly with capacity as drives are added. Kinetic has an advantage over HGST's approach in handling Hadoop-like unstructured data directly, with a very simple software stack in the OS, but applications and systems software must change to take advantage of it. Kinetic support will be included in upcoming releases of Ceph, for example. The Kinetic drives have an extra processor core for key-value management.

To answer whether Ethernet drives will become mainstream, we have to separate data transmission from work function. 25 GbE is on the near horizon and, frankly, Fibre Channel, SAS and SATA are being overtaken by Ethernet. There will be a point where Ethernet alternatives make more sense.

The million-dollar question is whether Ethernet drives can achieve enough performance and functionality to become the data plane in a software-defined storage environment. If they're limited to bulk archive hard drives, then we’ll continue to see a broad spectrum of other storage solutions. If the Ethernet approach can be economically migrated into arrays for the primary storage layer, then wholesale adoption is a possibility.

Seagate plans to bring Kinetic to SSDs in the future, which makes sense given the importance of key-value data in analytics. Here, a simple stack should reduce finding and accessing an object to a single I/O, compared with the typical layered directory approach, which today may involve several I/Os. That should improve system-level performance.
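
The single-I/O claim can be illustrated with a simplified model of a cold (uncached) lookup. This is a sketch under stated assumptions, not a measurement of any real file system: it assumes one read per directory block and per inode along the path, and that the key-value drive keeps its index in DRAM. Real file systems cache directory entries and inodes, so their actual counts are usually lower.

```python
def fs_lookup_ios(path: str) -> int:
    """Uncached reads to fetch a file's first data block in a simplified
    layered file system: read the root inode, then for each path component
    read the parent directory's block (to find the name) and the component's
    inode, and finally read the data block."""
    components = [part for part in path.split("/") if part]
    ios = 1                        # root directory inode
    ios += 2 * len(components)     # directory block + inode per component
    ios += 1                       # the file's first data block
    return ios


def kv_lookup_ios(key: str) -> int:
    """Key-value get with the drive's index held in DRAM: the drive already
    knows where the value is, so one media read suffices (the key itself does
    not change the count in this model)."""
    return 1


for path in ("/a.log", "/data/2014/09/sensor.log"):
    print(f"{path:<26} file system: {fs_lookup_ios(path):>2} I/Os, "
          f"key-value: {kv_lookup_ios(path)} I/O")
```

In this model a four-level path costs ten reads against one for the key-value lookup; the gap narrows as caches warm, but it shows where the layered approach spends its extra I/Os.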

With Kinetic due for general availability in the fourth quarter of this year, and HGST still uncommitted on dates, it is far too early to know how these drives will perform. We haven’t seen an end-to-end solution, so performance remains an unknown quantity. Expect to pay a premium for Ethernet drives, at least for a while.

One can speculate that inexpensive multi-core ARM chips will allow any performance issues to be overcome. In that case, the all-Ethernet data plane model might just work, with the possible exception of very fast SSDs installed close to the servers. Quite probably, the interfaces will gain functionality in radically different ways before things settle down.

All this means Ethernet drives should find a place in mainstream storage within a few years. Their fate is tied closely to the acceptance and success of software-defined storage.