How to Get Your Data Center Ready for 100G

To meet increasing consumer and enterprise demand for data (especially from video streaming and IoT devices), data centers must upgrade their infrastructure.

April 25, 2019

Today, the focus for many data centers is accommodating 100 Gbps speeds: 28 percent of enterprise data centers have already begun their migration.

Here are three considerations to guide upgrade projects that account for both the current and future states of your data center.

1) Understand your options for 100G links

Understanding the options for Layer 0 (physical infrastructure) and what each can do will help you determine which best matches your needs and fits your budget.

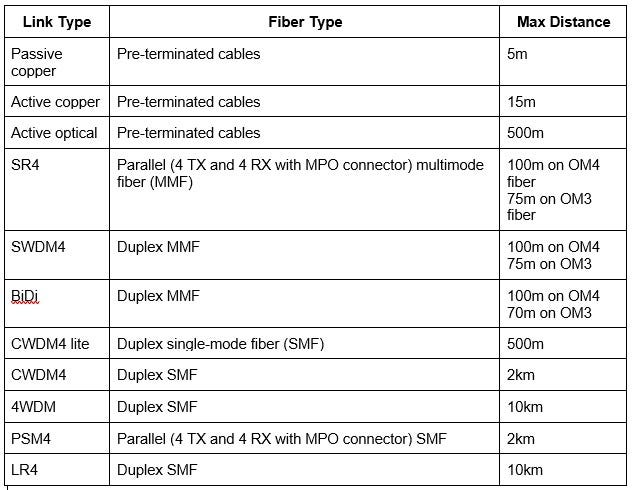

Here are several options:

20190425-100G.jpg

For example, suppose you’re at 10G today, your fiber plant is OM3 with runs of up to 65 meters, and you want to move to 100G. You have two options, SWDM4 and BiDi, that let you keep your legacy duplex multimode infrastructure.

On the other hand, if you’re moving from 10G to 100G on an OM4 fiber plant with many runs longer than 100 meters, you’ll need to upgrade those runs to single-mode fiber (SMF).
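The two examples above boil down to a filter on fiber grade, run length, and connector type. Here is a minimal Python sketch of that logic. It assumes the nominal reaches listed in the code, which are our reading of the IEEE 802.3 and MSA specifications rather than values taken from the image above, so check vendor datasheets before relying on them. The `Optic` class, the `OPTICS` list, and `options_for()` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Optic:
    name: str
    fiber: str               # "MMF" (multimode) or "SMF" (single-mode)
    duplex: bool             # True: one fiber pair (LC); False: parallel (MPO, 8 fibers)
    reach_m: dict[str, int]  # nominal maximum reach per fiber grade, in meters


# Assumed nominal reaches; verify against the relevant spec and vendor datasheets.
OPTICS = [
    Optic("SR4",   "MMF", False, {"OM3": 70, "OM4": 100}),
    Optic("SWDM4", "MMF", True,  {"OM3": 75, "OM4": 100}),
    Optic("BiDi",  "MMF", True,  {"OM3": 70, "OM4": 100}),
    Optic("PSM4",  "SMF", False, {"OS2": 500}),
    Optic("CWDM4", "SMF", True,  {"OS2": 2000}),
    Optic("LR4",   "SMF", True,  {"OS2": 10000}),
]


def options_for(fiber_grade: str, run_m: int, duplex_only: bool = False) -> list[str]:
    """Return the optics whose nominal reach on `fiber_grade` covers `run_m` meters.

    With duplex_only=True, parallel optics are excluded, which models reusing
    an existing duplex (two-fiber) plant.
    """
    return [
        o.name
        for o in OPTICS
        if o.reach_m.get(fiber_grade, 0) >= run_m and (o.duplex or not duplex_only)
    ]


# Legacy 10G plant: duplex OM3 with runs up to 65 m
print(options_for("OM3", 65, duplex_only=True))  # ['SWDM4', 'BiDi']
# OM4 runs longer than 100 m: no multimode option covers them
print(options_for("OM4", 150))                   # []
```

The `duplex_only` filter is what rules out SR4 in the OM3 case: SR4 needs parallel multimode fiber, so it would not reuse a duplex 10G plant.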

But there’s more.

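For the longer runs, you can choose between duplex and parallel SMF. Which should you pick? PSM4 optics carry four parallel lanes over eight fibers (four pairs) with a nominal reach of 500 meters, while CWDM4 optics multiplex four wavelengths onto a single duplex pair and reach 2 kilometers. For “medium”-length runs (longer than 100 meters but shorter than 500 meters), the extra cost of installing parallel rather than duplex SMF is moderate, while the savings from using PSM4 optics instead of CWDM4 can be large (as much as 7x).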

Bottom line: do your own cost analysis. And don’t forget to consider the future: parallel SMF has a less expensive upgrade path to 400G. Added bonus: the individual fiber pairs in a parallel SMF trunk can be broken out at a patch panel into higher-density duplex connections.

2) Consider your future needs before choosing new fiber

Again, it’s best to upgrade your data center with the future in mind. If you’re laying new fiber, be sure to consider which configuration will offer the most effective “future proofing.”

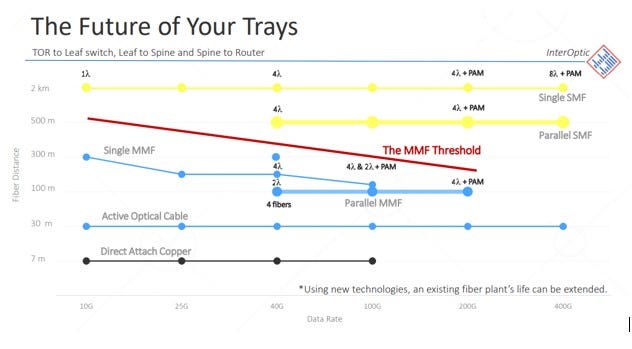


As you can see from the image above, for long runs you may be better off using parallel SMF. However, because a parallel PSM4 link uses eight fibers instead of two, there’s a point at which the cost of the extra fiber outweighs the savings from cheaper optics, so be sure to do the calculations for your data center.
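Under a simple linear cost model, the break-even run length can be written down directly. This is an assumption, not a pricing rule: each link costs two transceivers plus cabling priced per installed meter. Let L be the run length, P_C and P_P the per-module prices of CWDM4 and PSM4 optics, and c_d and c_p the per-meter costs of duplex and parallel SMF, with P_C > P_P and c_p > c_d:

```latex
\begin{aligned}
C_{\text{duplex}}(L)   &= 2P_C + c_d L \\
C_{\text{parallel}}(L) &= 2P_P + c_p L \\
C_{\text{parallel}}(L) < C_{\text{duplex}}(L)
  &\iff (c_p - c_d)\,L < 2\,(P_C - P_P)
  \iff L < L^{*} = \frac{2\,(P_C - P_P)}{c_p - c_d}
\end{aligned}
```

Parallel SMF also has to stay within the PSM4 reach (nominally 500 meters), so it comes out ahead only when L < min(L*, 500 m). With hypothetical figures of P_C − P_P = 600 USD and c_p − c_d = 4 USD per meter, L* = 2 × 600 / 4 = 300 meters.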

And remember: planning for future needs is a business decision as much as a technical one, so you’ll want to consider questions like these:

How soon will you need to upgrade to 400G, judging by the time that elapsed between your move from 1G to 10G and your move from 10G to 100G?

Is upgrading to 100G capability right now the best move, given the planned direction of your business?
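One rough way to approach the first question is to extrapolate from your own upgrade history. The Python sketch below projects the next upgrade year from the average gap between past upgrades. It is a naive linear trend, and the years in the example are hypothetical. Treat the result as a starting point for the business discussion, not a forecast.

```python
def project_next_upgrade(upgrade_years: list[int]) -> float:
    """Project the next upgrade year from the average gap between past upgrades.

    `upgrade_years` lists the year of each past upgrade, oldest first,
    e.g. [year moved to 10G, year moved to 100G]. Needs at least two entries.
    """
    if len(upgrade_years) < 2:
        raise ValueError("need at least two past upgrades to measure a gap")
    gaps = [later - earlier for earlier, later in zip(upgrade_years, upgrade_years[1:])]
    return upgrade_years[-1] + sum(gaps) / len(gaps)


# Hypothetical history: moved to 10G in 2010 and to 100G in 2019.
print(project_next_upgrade([2010, 2019]))        # 2028.0
# Adding a hypothetical move to 1G in 2003 shortens the average gap.
print(project_next_upgrade([2003, 2010, 2019]))  # 2027.0
```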

3) Consider the evolution of data center technology

Technology solutions get cheaper the longer they’re on the market. So if your data center can wait two to three years to make upgrades, then waiting may be the most cost-effective option.

For example, a smaller form factor is coming out soon. In the next two to three years, 100G will move to the SFP-DD form factor, which is denser than QSFP28. More ports fit in the same space, which is good for tight server closets and for anyone paying by the square foot for co-location.

SFP-DD ports are also backward-compatible with SFP+ modules and cables, so you can upgrade at your own pace. Even if you’re not ready to run every port at 100G, you can upgrade the switch now and keep using your existing 10G SFP+ devices until you need to move them to 100G.
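The staged migration described above can be modeled in a few lines of Python. This sketch rests on stated assumptions: the set of modules an SFP-DD cage accepts reflects our reading of the SFP-DD MSA’s backward-compatibility goal, the per-module rates (10G for SFP+, 25G for SFP28, 100G for SFP-DD) are nominal, and the 48-port switch is hypothetical.

```python
# Assumed nominal line rate per module type, in Gb/s.
MODULE_SPEED_GBPS = {"SFP+": 10, "SFP28": 25, "SFP-DD": 100}
# Assumed set of module types that fit an SFP-DD cage (backward compatibility).
SFP_DD_CAGE_ACCEPTS = {"SFP+", "SFP28", "SFP-DD"}


def switch_capacity(populated: dict[str, int], ports: int) -> int:
    """Aggregate capacity (Gb/s) of an SFP-DD switch holding a mix of modules.

    `populated` maps module type -> number of ports holding that module type.
    Raises ValueError if there are more modules than ports, or if a module
    type would not fit an SFP-DD cage.
    """
    used = sum(populated.values())
    if used > ports:
        raise ValueError(f"{used} modules but only {ports} ports")
    for module in populated:
        if module not in SFP_DD_CAGE_ACCEPTS:
            raise ValueError(f"{module} does not fit an SFP-DD cage")
    return sum(MODULE_SPEED_GBPS[m] * n for m, n in populated.items())


# Hypothetical 48-port switch, migrated in stages.
print(switch_capacity({"SFP+": 48}, ports=48))                # 480
print(switch_capacity({"SFP+": 32, "SFP-DD": 16}, ports=48))  # 1920
```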

Proceed with caution

Upgrading a data center means managing a lot of moving pieces, so there’s plenty of room for things to go wrong. Consider this example: a data center manager noticed that his brand-new 25G copper links (server to switch) were performing poorly, dropping packets and losing the link.

Remote diagnostics showed no problems, so he decided to inspect the new installation in person. He found that, since his last inspection, the installers had used plastic cable ties to attach all the cables to the racks. That was fine for older twisted-pair cables, but the new 25G twinax copper cables are highly engineered and have strict specifications for bend radius and crush pressure.

The tightly cinched cable ties bent the cables and put pressure on the jacketing, which changed the cables’ properties and caused intermittent errors. All the cables had to be thrown away and replaced, which was obviously not a cost-effective outcome.

So, as you weigh your options for upgrading your data center to 100G, think through performance, cost, loss budgets, distance, and any other factors that matter for your environment.
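A loss budget check is simple arithmetic: add up the fiber attenuation over the run plus the loss of each mated connector pair and splice, then compare the total with the maximum channel insertion loss your optic allows. The Python sketch below does that. The attenuation, connector, and budget values in the example are illustrative placeholders, so substitute the figures from your optic’s specification and your cabling datasheets, and check whether the optic’s budget already includes a connector allowance.

```python
def channel_loss_db(length_m: float, atten_db_per_km: float,
                    connectors: int, loss_per_connector_db: float,
                    splices: int = 0, loss_per_splice_db: float = 0.0) -> float:
    """Estimated insertion loss of a passive fiber channel, in dB."""
    fiber_loss = atten_db_per_km * length_m / 1000  # attenuation scales with length
    return (fiber_loss
            + connectors * loss_per_connector_db
            + splices * loss_per_splice_db)


def margin_db(loss_db: float, max_channel_loss_db: float) -> float:
    """Remaining headroom in dB; a negative value means the link is over budget."""
    return max_channel_loss_db - loss_db


# Illustrative values only: a 400 m SMF run at 0.4 dB/km with four mated
# connector pairs at 0.5 dB each, checked against a hypothetical 3.3 dB budget.
loss = channel_loss_db(400, 0.4, connectors=4, loss_per_connector_db=0.5)
print(f"loss {loss:.2f} dB, margin {margin_db(loss, 3.3):.2f} dB")
# loss 2.16 dB, margin 1.14 dB
```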
