Understanding the Power Benefits of Data Processing Units

The use of hardware acceleration in a DPU to offload processing-intensive tasks can greatly reduce power use, resulting in a more efficient data center.

November 8, 2022

The data processing unit (DPU) is a relatively new technology that offloads processing-intensive tasks from the CPU onto a separate “card” in the server. I put “card” in quotes because that’s the form factor, but in reality, a DPU is an onboard mini server that’s highly optimized for networking, storage, and management tasks. A server’s general-purpose CPU was never designed for these types of intensive data center workloads, and they can often bog it down.

A good analogy is the GPU, which handles graphics- and math-intensive tasks so the CPU can focus on the types of tasks it was designed for. A DPU plays a similar role for data center tasks. Despite this strong value proposition, there are still many DPU skeptics, primarily because DPUs use more power than a regular network card, but that view shows a lack of understanding of workloads.

Consider automobiles. If you asked someone whether a hybrid car or a gas-powered pickup truck is more fuel efficient, everyone would say the hybrid. But what if the workload is transporting 10,000 pounds of gravel for 500 miles? In that case, the pickup would be much more efficient because it’s designed for that workload.

DPUs play a similar role, except no one has ever done the math to quantify it, at least not until now. Recently, DPU maker NVIDIA issued a DPU efficiency white paper and a summary blog post that look at the impact of its BlueField DPU on data center power consumption. NVIDIA isn't the only DPU manufacturer, but I believe it is the first to quantify the impact on power.

The report does a nice job of setting the stage for future power requirements. NVIDIA used several independent studies to project how data center power will change. Currently, it's estimated that data centers consume about 1% of worldwide electricity. That number is 1.8% in the US and 2.7% in Europe. The best-case scenario is that this will rise to 3%, with a worst-case projection of 8%.

At the same time, Power Usage Effectiveness (PUE), a measure of power efficiency calculated as total facility power divided by IT equipment power (the lower the number, the better), has been flatlining in the last few years. In 2007, PUE ratios were in the 2.5 range. They dropped to 1.98 in 2011 and then to 1.65 in 2013. Since then, traditional methods of optimizing power, such as maximizing workloads, improving cooling, and other initiatives, have produced only minimal gains in PUE.
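Because PUE is total facility power divided by IT equipment power, a PUE of 1.65 means roughly 0.65W of cooling, power distribution, and other overhead for every watt delivered to IT gear. The minimal Python sketch below converts the PUE values cited above into overhead for a hypothetical 1,000 kW IT load (that load is an illustrative assumption, not a figure from the report). It also hints at why DPUs attack the problem from a different angle: PUE only measures overhead relative to IT load, so reducing the IT load itself lowers total power even when PUE stays flat.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_kw(pue_ratio: float) -> float:
    """Non-IT power (cooling, distribution, lighting) drawn per 1 kW of IT load."""
    return pue_ratio - 1.0


if __name__ == "__main__":
    # PUE values cited in the article; the 1,000 kW IT load is hypothetical.
    it_load_kw = 1000.0
    for year, ratio in [(2007, 2.5), (2011, 1.98), (2013, 1.65)]:
        facility_kw = ratio * it_load_kw  # total power implied by this PUE
        print(
            year,
            f"PUE={pue(facility_kw, it_load_kw):.2f}",
            f"overhead={overhead_per_it_kw(ratio) * it_load_kw:.0f} kW",
        )
```

Going from a PUE of 2.5 to 1.65 cuts overhead on a 1,000 kW IT load from about 1,500 kW to about 650 kW, but once PUE plateaus, the remaining lever is the IT load term in the denominator, which is exactly what offloading targets.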

This is where DPUs can have a big impact. Today’s data centers rely heavily on software-defined infrastructure to increase agility, scale, and manageability. On servers, virtualization, networking, storage, security, and management functions are executed by virtual machines (VMs), containers, or agents that run on the main CPU. These processes can consume 30% or more of a CPU’s capacity. As previously mentioned, CPUs are not ideally suited for these types of workloads: general-purpose CPUs are optimized to execute single-threaded workloads quickly, not for power efficiency.
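The 30% figure can be translated into server counts with simple arithmetic. The sketch below is a simplified model, not NVIDIA's methodology: it assumes infrastructure overhead is a fixed fraction of every host CPU and, in the offloaded case, that the DPU absorbs all of it. The 100-server workload is hypothetical. It counts servers only; each DPU adds its own power draw, which this sketch ignores.

```python
import math


def servers_needed(app_capacity_servers: float, infra_overhead: float) -> int:
    """Servers required to deliver a given amount of application CPU capacity.

    app_capacity_servers: application work, in units of fully available servers.
    infra_overhead: fraction of each host CPU consumed by infrastructure software
        (virtual switching, storage, security, management agents).
    """
    if not 0.0 <= infra_overhead < 1.0:
        raise ValueError("infra_overhead must be in [0, 1)")
    # Each server only contributes (1 - overhead) of its CPU to applications.
    return math.ceil(app_capacity_servers / (1.0 - infra_overhead))


if __name__ == "__main__":
    # Hypothetical workload needing 100 servers' worth of application CPU.
    on_host = servers_needed(100, 0.30)   # infrastructure runs on the host CPU
    offloaded = servers_needed(100, 0.0)  # assumes the DPU absorbs all of it
    print(on_host, offloaded)
```

Under these assumptions, 30% overhead means 143 servers to deliver what 100 unencumbered servers could, which is the intuition behind the "fewer servers" outcome.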

Conversely, DPUs have dedicated hardware engines to accelerate networking, data encryption/decryption, key management, storage virtualization, and other tasks. Also, the Arm cores on DPUs are more power efficient than general-purpose CPUs and have a direct connection to the network pipeline. This is why DPUs handle tasks like telemetry, deep packet inspection, and other network services much better than CPUs. DPUs should be thought of as domain-specific processors like a GPU or an ASIC that are designed for security, storage, networking, and other infrastructure-heavy tasks.

The theory behind why DPUs are more power efficient is solid, and NVIDIA supported the thesis with several use cases.

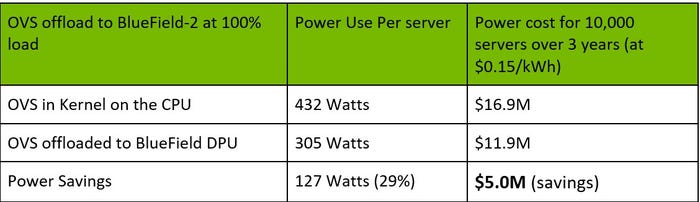

One use case was an Open vSwitch (OVS) network offload with a North American wireless operator. OVS is a widely deployed open-source virtual switch used for software-defined networking (SDN). Normally, OVS runs on the server's x86 CPU as OS kernel software, but it can be offloaded to the networking accelerator on the BlueField DPU. In this test, the workload varied from 0% to 100%, and NVIDIA compared the power consumption of running OVS in the kernel (on the CPU) with offloading OVS to the DPU.

As the table below shows, the DPU offload reduced power consumption by up to 29% (127W) at 100% workload because the BlueField DPU is both faster and more power efficient than the x86 CPU at processing OVS SDN tasks.
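Reporting both a percentage and an absolute saving implies the baseline power draw. The short Python sketch below backs it out from the reported 29% and 127W; because the percentage is rounded, the result is approximate and may not exactly match the values in NVIDIA's table.

```python
def baseline_from_savings(saved_watts: float, saved_fraction: float) -> tuple[float, float]:
    """Return (before, after) power in watts implied by an absolute and relative saving."""
    if not 0.0 < saved_fraction < 1.0:
        raise ValueError("saved_fraction must be between 0 and 1")
    before = saved_watts / saved_fraction  # saved = fraction * before
    return before, before - saved_watts


if __name__ == "__main__":
    # OVS figures at 100% workload as reported: 127 W saved, a 29% reduction.
    before, after = baseline_from_savings(127, 0.29)
    print(f"kernel OVS ~{before:.0f} W, DPU offload ~{after:.0f} W")
```

For the OVS case, this works out to roughly 438W with OVS in the kernel versus roughly 311W with the DPU offload.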


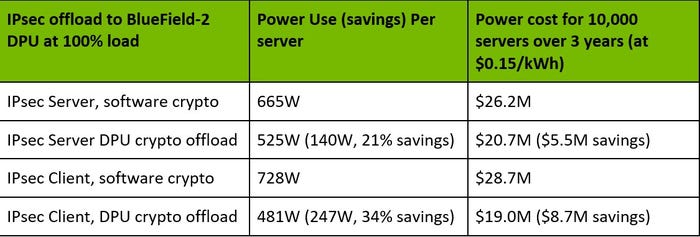

In another test, NVIDIA compared power consumption while running network traffic encrypted with IPsec. IPsec encrypts (and decrypts) network traffic at Layer 3 and is extremely processing intensive. I recall that, when I was a network engineer, we would often turn IPsec off at peak times because the servers would struggle to keep up. Encryption on every network link is increasingly a requirement in data centers because it is part of a zero-trust security stance, protecting data even if some other server or application in the data center is compromised by a cybersecurity adversary. A DPU with the appropriate offloads can perform IPsec encryption and decryption faster and with less power than a standard CPU.

The table below compares the power consumption of IPsec-encrypted traffic using the CPU (IPsec in software) versus the DPU (IPsec accelerated in DPU hardware). The DPU delivered a 21% power savings (140W) on the server and a 34% power savings (247W) on the client.


The report covers several other use cases, including a 5G deployment and a vSphere implementation, and both show that offloading processing-intensive tasks to a DPU yields significant energy savings, which translate into cost savings. It's important to note that the higher the power costs, the greater the savings. The report's baseline was 15 cents per kWh. In parts of Europe, power costs roughly double that, such as in the UK (29.8 cents), Spain (30.6 cents), and Germany (32 cents).
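Converting watts saved into annual electricity cost is straightforward arithmetic. The sketch below assumes each reduction is sustained around the clock for a full year, which overstates the OVS case because 127W is the saving at 100% workload. It also ignores cooling; multiplying by a facility's PUE would give a rough estimate that includes cooling and power-distribution overhead, assuming that overhead scales with IT load. Prices are in cents per kWh as cited in the article, and the currency is left unspecified.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def annual_savings(saved_watts: float, price_cents_per_kwh: float) -> float:
    """Yearly savings, in whole currency units, for a continuous cut in power draw."""
    kwh_per_year = saved_watts * HOURS_PER_YEAR / 1000.0
    return kwh_per_year * price_cents_per_kwh / 100.0


if __name__ == "__main__":
    # Savings figures from the article's OVS and IPsec use cases.
    savings_w = {
        "OVS offload (per server)": 127,
        "IPsec offload (server + client)": 140 + 247,
    }
    # The report's baseline price and the European prices cited above.
    prices = {"baseline": 15.0, "UK": 29.8, "Spain": 30.6, "Germany": 32.0}
    for case, watts in savings_w.items():
        for region, cents in prices.items():
            print(f"{case:32s} {region:9s} {annual_savings(watts, cents):8.2f}")
```

At the 15-cent baseline, 127W saved continuously is about 1,113 kWh, or roughly 167 per server per year; at Germany's 32 cents, the same saving is worth about 356.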

The use of hardware acceleration in a DPU to offload processing-intensive tasks can greatly reduce power use, resulting in more efficient or, in some cases, fewer servers, a more efficient data center, and significant cost savings from reduced electricity consumption and reduced cooling loads.

Zeus Kerravala is the founder and principal analyst with ZK Research.

(Read his other Network Computing articles here.)
