Is Your Infrastructure Ready? What You Need to Know Ahead of an AI Investment

Modernizing the enterprise architecture is a critical path on the journey to becoming a digital, AI, and data-driven business.

November 21, 2023

There are two important trends driving infrastructure changes that will impact the results of your AI investment.

In years gone by, we saw companies rush to cloud for agility and cost savings, lured by the frenetic news that cloud was going to change everything. It did, but it took a toll on many who failed to consider the changes being wrought in everything from compute to infrastructure to app services.

Today, the siren song of AI is attracting many who want to be “in before” the benefits start rolling in.

But let's learn from history this time. There are skills, technologies, and practices that need to be put in place to successfully see a return on your AI investment. The AI tech stack is still forming, but it is rapidly shaping up to include a broad range of technologies and capabilities that many organizations today do not possess.

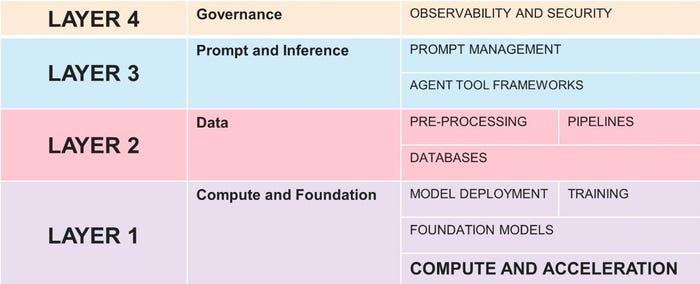

There are several different versions of this stack, but most share this simple structure and nearly all of the components. And one of them, you'll note, is at the very bottom, almost like it’s foundational.

And in that layer – compute and foundation – you’ll note that what’s holding up the entire AI stack is, unsurprisingly, containers and hardware.

It is here that we see two key trends accelerating change that need to be considered – and likely incorporated into your own tech stack – before you sign that AI investment check.

#1 Compute Platform

If you didn't see this one coming, it's time to glance up and take a good look at the workloads deployed in Kubernetes across core, cloud, and edge.

Dynatrace did just that and found that the once “application-centered” environment now hosts more auxiliary workloads (63%) than application workloads (37%). And what are those auxiliary workloads? Why, according to Pepperdata, they're workloads that address the growing need for data-related capabilities: Data Ingestion, Cleansing, and Analytics (61%), Databases (59%), Microservices (57%), and AI/ML (54%).

Anecdotally, we're also seeing this shift in the field, from Kubernetes as primarily app-oriented infrastructure to Kubernetes as general compute infrastructure. As Kubernetes has matured, its ability to support a more robust set of workloads has also matured, and containers stand ready to replace virtual machines as the platform of choice for delivering modern apps, data, and AI/ML workloads.

So, how's your container-fu? Do you have the practices, processes, and skills necessary to operate containers as a compute platform? If not, you might want to direct some of that AI budget to getting that in order.

#2 Compute Hardware

Anyone who’s attempted to run an LLM locally understands that the compute requirements can be overwhelming. Between memory and CPU needs, AI/ML has exponentially increased the upper bounds of the compute needed simply to run an AI/ML model, let alone train it.

This is a reflection of the increased complexity of the tasks models are asked to perform and the greater availability of data. The increase in demand is reflected in the number of parameters of large language and multimodal models, according to the Stanford University 2023 AI Index:

“Over time, the number of parameters of newly released large language and multimodal models has massively increased. For example, GPT-2, which was the first large language and multimodal model released in 2019, only had 1.5 billion parameters. PaLM, launched by Google in 2022, had 540 billion, nearly 360 times more than GPT-2.”

Stanford’s report is the most thorough I’ve read, and chapter two includes significant research and performance testing. The results? Moore’s Law, it turns out, isn’t dead after all. It’s merely turned its attention to GPUs: “the median FLOP/s per U.S. Dollar of GPUs in 2022 is 1.4 times greater than it was in 2021 and 5600 times greater than in 2003, showing a doubling in performance every 1.5 years.”
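If you want to sanity-check those numbers yourself, the arithmetic is short. The sketch below uses only the figures quoted above; the function name `doubling_time_years` is my own, and it assumes steady exponential growth between 2003 and 2022, which is a simplification.

```python
import math


def doubling_time_years(growth_factor: float, span_years: float) -> float:
    """Years per doubling implied by a total growth factor over a span.

    Assumes smooth exponential growth (a simplification, not a claim
    about how the AI Index derived its figure).
    """
    return span_years / math.log2(growth_factor)


# Figures quoted from the AI Index above.
param_ratio = 540e9 / 1.5e9  # PaLM vs. GPT-2 parameter counts
gpu_doubling = doubling_time_years(5600, 2022 - 2003)

print(f"PaLM / GPT-2 parameters: {param_ratio:.0f}x")
print(f"Implied GPU price-performance doubling time: {gpu_doubling:.2f} years")
```

The parameter ratio comes out to 360, and the implied doubling time lands right around the 1.5 years the report cites.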

What this means for enterprises is that even the beefiest servers aren’t likely ‘good enough’ to run today’s models, let alone the models of tomorrow. It is time to turn some of that AI budget into modernizing the infrastructure and fattening up its compute capacity.
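To put a rough number on "good enough," here is a back-of-the-envelope sketch of the memory needed just to hold a model's weights. The parameter counts are the ones quoted above. The bytes-per-parameter values are the standard widths of common numeric formats, and the helper name `weight_memory_gb` is hypothetical. It deliberately ignores activations, caches, and runtime overhead, so treat the results as a floor, not a sizing guide.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Gigabytes (10^9 bytes) needed to hold the weights alone.

    Illustrative only: excludes activations, caches, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts as quoted from the AI Index.
for name, params in [("GPT-2", 1.5e9), ("PaLM", 540e9)]:
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):,.0f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name:>5} -> {sizes}")
```

Under these assumptions, a 1.5 billion parameter model fits in a few gigabytes at 16-bit precision, while a 540 billion parameter model needs on the order of a terabyte before it does any work at all.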

Tip of the Iceberg

Remember that compute platforms and hardware are at the very bottom of the AI stack. There’s more above them that needs attention: data pipelines and practices, governance and security, familiarity with vector databases, and at least a passing thought for prompt management. Ignore those, and the 21% of CEOs who told KPMG they expect to see ROI on their generative AI investment in 1-3 years are likely to be disappointed.

When we laid out the blueprint for modernizing enterprise architecture for digital business, AI/ML was a key driver for the expansion of the data domain and why infrastructure and app delivery were broken out into their own domains. And, of course, it’s why observability and automation were new additions to the enterprise architecture model.

Modernizing the enterprise architecture is a critical path on the journey to becoming a digital – AI and data-driven – business.

And it all starts with ensuring you have a strong foundation, which, for enterprise IT, means compute infrastructure.
