The AI Infrastructure Shift: Redefining Application Delivery

To succeed today, businesses must adapt quickly, innovate relentlessly, and ensure that their AI infrastructure can perform in ways that were once unimaginable.

November 15, 2024

It has become obvious that this whole AI disruption is one of those disruptions. You know, the kind that doesn’t just change the way we do things and the applications we use to do them, as the pandemic did. This is the kind that changes the very foundations of the technology used to deliver and secure new types of applications. What’s needed is an AI infrastructure shift.

Why? The way we used to build solutions, choose data centers, and architect networks grew out of key assumptions about IT infrastructure and application delivery that no longer hold true today.

Throughput Optimization: Systems were assumed to be optimized for throughput rather than latency or jitter, with a focus on high data transfer rates rather than the real-time performance that use cases like video streaming, autonomous vehicles, and real-time gaming require.

Homogeneous Clients and Consistent Latency: Clients were assumed to be homogeneous, with consistent latency expectations and limited security threats, typically from hacktivism, organized crime, or nation-state chaff, rather than more sophisticated or varied attack methods.

Simplified IT Infrastructure: The IT environment was assumed to be straightforward, with predictable application delivery and security requirements. In practice, the adoption of hybrid IT estates and the complexity of generative AI workloads have created the need to support heterogeneous architectures and deployment models.

These assumptions were based on a simpler, more predictable landscape. However, with the rise of generative AI, the widespread adoption of hybrid IT environments (spanning public cloud, on-premises, and edge), and the increasing complexity of application delivery and security, these assumptions no longer hold true.

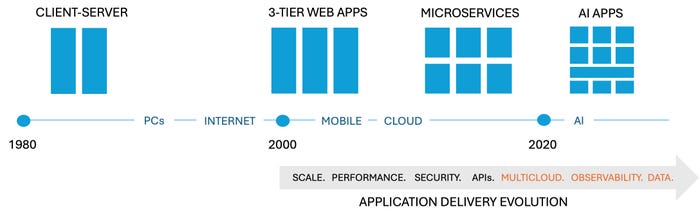

Implementing an AI Infrastructure to Keep Pace with App Changes

Today, we have traditional clients, mobile clients, IoT devices, AI agents, apps, and scripts all acting as legitimate clients to an application or API. Enterprises are citing "too many tools and APIs" as their number one challenge when managing multicloud estates, which complicates efforts to move toward more autonomous operations (AIOps). And with multimodal, non-deterministic input becoming the norm thanks to generative AI, network and application delivery now must balance throughput, latency, and jitter in real time—often without the visibility necessary to do so effectively.

Suffice it to say that we're trying to deliver and secure a new generation of applications with the last generation of technology.

That means it’s time to seriously consider what changes are needed for application delivery to address a new application architecture, new performance expectations, and the need to operate seamlessly across all environments.

For application delivery, this means three things. The first is supporting multi-cloud networking. The second is enabling real-time observability by generating telemetry on critical metrics like latency, throughput, and AI-specific performance. The third is expanding delivery and security capabilities to support not only traditional applications but also the large-scale data requirements of generative AI, such as the massive datasets required for model training and inference.
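To make the telemetry point concrete, here is a minimal Python sketch of how latency, jitter, and throughput might be derived from a window of request samples. Everything in it is an assumption for illustration: the `RequestSample` fields, the `summarize` function, and the choice to define jitter as the mean absolute change in latency between consecutive requests are not taken from any particular product or standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestSample:
    """One observed request. Field names are hypothetical, chosen for this sketch."""
    start_s: float      # when the request started, in seconds
    latency_ms: float   # how long the request took, in milliseconds
    bytes_sent: int     # size of the response payload, in bytes


def summarize(samples: list[RequestSample]) -> dict[str, float]:
    """Return mean latency, jitter, and throughput for a window of samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to compute jitter")

    ordered = sorted(samples, key=lambda s: s.start_s)
    latencies = [s.latency_ms for s in ordered]

    # Jitter (assumed definition): mean absolute change in latency
    # between consecutive requests.
    jitter_ms = mean(abs(b - a) for a, b in zip(latencies, latencies[1:]))

    # The window runs from the first request start to the last completion.
    window_end_s = max(s.start_s + s.latency_ms / 1000 for s in ordered)
    window_s = window_end_s - ordered[0].start_s
    if window_s <= 0:
        raise ValueError("samples must span a positive time window")

    throughput = sum(s.bytes_sent for s in ordered) / window_s

    return {
        "mean_latency_ms": mean(latencies),
        "jitter_ms": jitter_ms,
        "throughput_bytes_per_s": throughput,
    }


if __name__ == "__main__":
    window = [
        RequestSample(start_s=0.0, latency_ms=100.0, bytes_sent=1000),
        RequestSample(start_s=1.0, latency_ms=200.0, bytes_sent=1000),
        RequestSample(start_s=2.0, latency_ms=100.0, bytes_sent=2000),
    ]
    print(summarize(window))
```

Reporting all three numbers side by side matters because a window can show healthy throughput while latency swings widely from one request to the next, which is the kind of trade-off the delivery layer now has to see in order to manage.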

Recognizing the profound impact of AI as a disruptor of infrastructure is no small thing. It's clear that the landscape of application delivery and infrastructure is fundamentally changing. The assumptions that once guided how we built, deployed, and secured applications are no longer sufficient in the face of generative AI, hybrid IT estates, and the complex demands of modern enterprises. To stay ahead, businesses must embrace new paradigms—prioritizing multi-cloud flexibility, real-time observability, and the scalability required to handle the vast amounts of data and dynamic workloads AI demands.

A Final Word About the Need for a New AI Infrastructure

The rise of generative AI is not just a technological shift; it is a catalyst for rethinking how we approach application architecture, security, and performance. Just as the advent of the cloud redefined IT infrastructure a decade ago, AI is now pushing us to rethink our entire approach to application delivery, from the network edge to the data center.

In this new world, success will depend on the ability to adapt quickly, innovate relentlessly, and ensure that infrastructure can scale and perform in ways that were once unimaginable. The organizations that can successfully navigate this disruption will be the ones that lead the way into the next era of technology. The time to act is now—because the future is already here.
