Data Center Networking

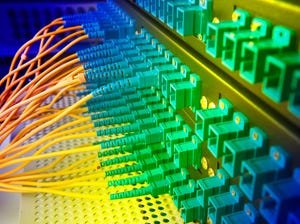

Data center networking is the infrastructure and set of technologies that interconnect servers, storage systems, and other devices within a data center facility.

Revolutionizing Data Centers: The Impact of Direct Liquid Cooling

As AI workloads grow, enterprises are adopting direct liquid cooling to keep their data centers cool sustainably and running efficiently.
